Methodological notes

Published on October 28, 2022 | http://doi.org/10.5867/medwave.2022.09.2622

Systematic reviews: Key concepts for health professionals

Abstract

The exponential growth of available evidence has made it necessary to collect, filter, critically appraise, and synthesize biomedical information in order to keep up to date. Systematic reviews are a helpful tool for this purpose and can be reliable sources to support evidence-based decision-making. Systematic reviews are defined as secondary research, or syntheses of evidence, that focus on a specific question and use a structured methodology to identify, select, critically appraise, and summarize the findings of relevant studies. Systematic reviews have several potential advantages, such as minimizing bias and obtaining more precise results. The reliability of the evidence presented in a systematic review depends, among other factors, on the quality of its methodology and of the included studies. Conducting a systematic review involves a series of steps: formulating a research question using the participants, interventions, comparisons, outcomes (PICO) format; performing an exhaustive literature search; selecting the relevant studies; critically appraising the included studies and extracting their data; synthesizing the results, often with statistical methods (meta-analysis); and, finally, estimating the certainty of the evidence for each outcome. In this methodological note, we define the basic concepts of systematic reviews, their methods, and their limitations.

Main messages

- Systematic reviews are a form of evidence synthesis that can be a reliable source to support evidence-based decision-making.

- Among the advantages of systematic reviews are the minimization of bias and the increased precision of the results.

- They are guided by a specific question and a structured methodology that allow us to identify, select, and critically appraise the findings of individual studies, and to synthesize them qualitatively or quantitatively (meta-analysis).

- Limitations may include poor methodological quality, becoming outdated, the quality of the included studies (garbage-in/garbage-out), redundancy and duplication, the inclusion of problematic (potentially fraudulent) studies, and conflicts of interest.

Introduction

In recent decades, the amount of scientific evidence in the health field has grown remarkably, with an estimated 75 clinical trials and 11 systematic reviews published every day [1]. This growth intensified during the COVID-19 pandemic, when the evidence on the subject grew exponentially, reaching a rate of 1000 publications per week in PubMed [2].

This overwhelming amount of evidence makes it impossible to keep up to date without an adequate synthesis of biomedical information. Archie Cochrane identified this problem decades ago when, in 1979, he raised the challenge of "having an organized and critical review, periodically updated for each specialty or subspecialty, of all relevant randomized controlled studies" [3]. Methods to collect, filter, and synthesize information therefore had to be developed. High-quality systematic reviews are one of the most reliable and widely used resources to support evidence-based decision-making. It is essential to clarify, however, that evidence-informed decision-making considers not only the certainty of the available evidence but also the balance between benefits and harms (which depends on patients' values and preferences), acceptability, feasibility, and costs, among other aspects [4].

This article is the eleventh in a series of methodological narrative reviews on general topics in biostatistics and clinical epidemiology, which explore and review, in accessible language, published articles available in the main databases and specialized reference texts. The series is intended for the education of undergraduate and graduate students. It is produced by the Chair of Evidence-Based Medicine of the School of Medicine of the University of Valparaiso, Chile, in collaboration with the Research Department of the University Institute of the Hospital Italiano in Buenos Aires, Argentina, the Institute of General Medicine of Heinrich Heine University in Düsseldorf, Germany, and the Centro Evidencia UC of the Pontificia Universidad Católica de Chile.

This article presents the most relevant topics on systematic reviews and the steps for conducting this type of study. It aims to be a resource in Spanish for students and health professionals without experience in systematic reviews. Our objectives are to define what a systematic review is, outline the main differences between it and other types of evidence synthesis, describe its steps, and explain how these steps can affect the reliability of its findings.

What is a systematic review?

The U.S. Institute of Medicine defines a systematic review as "a scientific investigation that focuses on a specific question and uses explicit, pre-specified scientific methods to identify, select, evaluate, and summarize the findings of similar but separate studies" [5].

Systematic reviews follow structured methodologies to minimize the risk of bias when selecting and analyzing evidence, which is an essential difference from narrative reviews [6]. First, they follow a protocol that is written in advance and prospectively registered in specialized databases such as the International Prospective Register of Systematic Reviews (PROSPERO) [7,8,9]. A protocol describes in detail the theoretical framework, the eligibility criteria and outcomes of interest, the search for and selection of articles, the methods for assessing risk of bias, and the methods for synthesizing the evidence and evaluating its certainty [10,11].

What are the main advantages of using a systematic review as a source of information?

Reduces evidence selection bias (cherry-picking)

By performing a systematic and comprehensive search of published and unpublished literature, all relevant evidence is selected regardless of its results. This avoids biased selection of studies, for example, selecting only the studies that favor a given intervention [12].

Greater precision in the results

When a quantitative synthesis is performed, meta-analysis is used to combine the results of different individual studies, which decreases random error and increases statistical power [13]. However, this gain in precision does not guarantee the validity of the results, since other factors, discussed in the next point (certainty), must also be considered.

It allows assessing the certainty of the results

Using the Grading of Recommendations Assessment, Development, and Evaluation (GRADE) methodology, which considers risk of bias, imprecision, inconsistency, indirect evidence, and publication bias, it is possible to estimate how much confidence we can place in the results obtained [4,14].

Other forms of systematic evidence synthesis are discussed in more detail in additional Medwave methodological notes [6]. These include scoping reviews and evidence maps [15], rapid reviews [16], living (continuously updated) reviews [17], network meta-analyses [18], and reviews of systematic reviews (overviews).

Cochrane was one of the first organizations to produce and develop systematic reviews, with the aim of providing summarized and reliable evidence to help answer the questions that constantly arise in healthcare decision-making. Today, the Cochrane Library includes the Cochrane Database of Systematic Reviews, which contains more than 8800 reviews that follow a rigorous methodology, refined over the years to meet updated quality criteria (described in the Cochrane Handbook and the Methodological Expectations of Cochrane Intervention Reviews, MECIR standards) [13,19]. Cochrane reviews include Summary of Findings (SoF) tables that follow the GRADE methodology and are easy to read and understand [14].

Other organizations, such as the Campbell Collaboration (www.campbellcollaboration.org/), the Joanna Briggs Institute (jbi.global/), and the BEME Collaboration (www.bemecollaboration.org/), also publish systematic reviews, some of them focused on specific topics (e.g., education, in the case of BEME). In addition to the Cochrane Handbook, methodological guidelines for systematic reviews include the Centre for Reviews and Dissemination manual, the Joanna Briggs Institute manual, and guidance from the Agency for Healthcare Research and Quality (AHRQ) and the U.S. Institute of Medicine (IOM) [5,20,21,22].

Steps to conducting a systematic review

1. Formulation of the question, eligibility criteria, and outcomes of interest

The first step is to define the key elements of the research question, which is usually done by structuring it in the PICO/PECO format (population, intervention/exposure, comparison, and outcome) [23,24] (Table 1). This format may vary depending on whether the question is therapeutic, prognostic, etiologic, diagnostic, or of another type (Table 2). Variants of the PICO question include "PICOS" and "PICOTS", where "T" indicates the follow-up time and "S" indicates the type of study (study design) or the study context (setting). For didactic purposes, we use a therapeutic question as the example throughout this article.

After structuring the basic elements of the PICO question, the eligibility criteria and outcomes of interest must be established in detail. The eligibility criteria are based mainly on the elements of the PICO question. For example, for the PICO question "In women at risk of preterm delivery, does the administration of prenatal corticosteroids decrease fetal, neonatal, and maternal morbidity and mortality?", the eligibility criteria should address the following questions:

- Patient: women at risk of preterm delivery. What age range will be included? How is the risk of preterm delivery defined? What definition of preterm delivery will we use?

- Intervention: prenatal corticosteroids. Are all drugs in this class included? At what dosage? By which route of administration?

- Type of study: which study designs will be included? Randomized clinical trials are typically included for therapeutic questions, but observational studies could also be considered; the latter are more relevant to diagnostic or prognostic questions.
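
The eligibility questions above can be made explicit before screening begins. The following is a minimal Python sketch, assuming each candidate study has already been summarized into a few extracted fields; the class names, the function `is_eligible`, the age range, and the accepted drugs, routes, and designs are all hypothetical choices for illustration, not criteria proposed by this article.

```python
from dataclasses import dataclass


@dataclass
class EligibilityCriteria:
    """Hypothetical encoding of the criteria for the corticosteroid question."""
    min_age: int
    max_age: int
    allowed_drugs: set
    allowed_routes: set
    allowed_designs: set


@dataclass
class StudyRecord:
    """Hypothetical fields extracted from one candidate study."""
    study_id: str
    min_age: int
    max_age: int
    drug: str
    route: str
    design: str


def is_eligible(study, criteria):
    """Return (eligible, reasons), checking each criterion separately."""
    reasons = []
    if study.min_age < criteria.min_age or study.max_age > criteria.max_age:
        reasons.append("population outside the age range")
    if study.drug not in criteria.allowed_drugs:
        reasons.append(f"intervention not eligible: {study.drug}")
    if study.route not in criteria.allowed_routes:
        reasons.append(f"route not eligible: {study.route}")
    if study.design not in criteria.allowed_designs:
        reasons.append(f"design not eligible: {study.design}")
    return not reasons, reasons


# Hypothetical criteria: every value here is an illustrative assumption.
criteria = EligibilityCriteria(
    min_age=15,
    max_age=49,
    allowed_drugs={"betamethasone", "dexamethasone"},
    allowed_routes={"intramuscular"},
    allowed_designs={"randomized controlled trial"},
)
trial = StudyRecord("Trial A", 18, 40, "dexamethasone", "intramuscular",
                    "randomized controlled trial")
cohort = StudyRecord("Cohort B", 18, 40, "betamethasone", "oral", "cohort")

print(is_eligible(trial, criteria))
print(is_eligible(cohort, criteria))
```

Returning every reason for exclusion, rather than a single yes/no, makes it easier to report why full-text articles were excluded, which PRISMA asks reviewers to do.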

Finally, it is crucial to define the outcomes of interest. For example, the previous PICO question could include perinatal mortality, maternal mortality, risk of operative delivery, and labor pain.

Outcomes can be classified according to their relevance as "critical," "important," or "unimportant" [28]. Critical and important outcomes are those that, if modified, would change the patient's acceptance of the intervention. For example, perinatal mortality would be a critical outcome, whereas pain at the corticosteroid injection site would be an unimportant one. Reviews may also define "primary" and "secondary" outcomes, usually restricting more advanced analyses (subgroup and sensitivity analyses) to the former.
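
GRADE suggests rating the importance of each outcome on a 1 to 9 scale, where 7 to 9 corresponds to critical outcomes, 4 to 6 to important ones, and 1 to 3 to outcomes of limited importance. The Python sketch below maps a rating onto the three categories used above; summarizing several raters with the median, and the ratings themselves, are illustrative assumptions.

```python
from statistics import median


def classify_importance(score):
    """Map a 1-9 importance rating to the three GRADE categories."""
    if not 1 <= score <= 9:
        raise ValueError("importance ratings must lie between 1 and 9")
    if score >= 7:
        return "critical"
    if score >= 4:
        return "important"
    return "unimportant"


# Hypothetical ratings given by four panel members for each outcome.
ratings = {
    "perinatal mortality": [9, 9, 8, 9],
    "labor pain": [5, 6, 4, 5],
    "pain at the injection site": [2, 3, 1, 2],
}

for outcome, scores in ratings.items():
    print(f"{outcome}: {classify_importance(median(scores))}")
```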

Outcomes can also be classified by whether they measure the phenomenon under study "directly" or "indirectly." Surrogate or intermediate outcomes are those that do not directly measure the phenomenon under study and are therefore used as substitutes for a clinically relevant outcome [28]. For example, neonatal oxygen saturation is a surrogate or intermediate outcome for the clinical outcomes of respiratory distress and perinatal mortality.

The Core Outcome Measures in Effectiveness Trials (COMET) initiative has developed standardized sets of outcomes, called Core Outcome Sets, which are the minimum outcomes that should be measured and reported in all clinical trials of a specific condition [29]. Just as these outcomes are considered critical and relevant for decision-making in clinical trials, systematic reviews should use the same outcomes when synthesizing evidence from such trials [30].

2. Bibliographic search and study selection

Once the PICO question and eligibility criteria have been formulated and structured, the next step is establishing the databases in which the literature search will be conducted. This is a key moment since an inadequate search can lead to a ripple effect in which the conclusions obtained in a review are inaccurate and unreliable. It is essential to define the following:

- Information sources: systematic reviews usually search diverse and complementary sources, including biomedical databases such as CENTRAL, MEDLINE/PubMed, EMBASE, CINAHL, PsycINFO, Scopus, and Web of Science, among others [31]. The features of each database are detailed in another methodological note of this series [32]. In addition, systematic reviews generally include strategies for finding gray literature, such as searching specific databases, checking reference lists, and consulting experts, among others [33].

- Terms and structure of the search strategy: when structuring a question, we must identify its main concepts to design a search strategy that includes the terms relevant to our search and excludes those that may hinder it. This requires the tools available on each database's search platform, such as the Boolean operators (OR, AND, and NOT) and filters (by year, type of study, language, and others) [31].

Another article in this methodological series details how to perform an exhaustive and structured bibliographic search [32].
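
The basic logic of combining Boolean operators can be shown with a short Python sketch: synonyms for one concept are joined with OR (broadening the search), separate concepts are joined with AND (narrowing it), and NOT removes terms, which should be used with care because it can also discard relevant records. The function names and search terms are hypothetical, and the sketch deliberately ignores platform-specific syntax such as controlled vocabulary (MeSH, Emtree), field tags, and truncation.

```python
def or_block(terms):
    """Join synonyms for one concept with OR, quoting multi-word phrases."""
    quoted = [f'"{t}"' if " " in t else t for t in terms]
    return "(" + " OR ".join(quoted) + ")"


def build_query(concepts, exclude=()):
    """Combine one OR-block per concept with AND; optionally append NOT terms."""
    query = " AND ".join(or_block(terms) for terms in concepts.values())
    if exclude:
        query += " NOT " + or_block(list(exclude))
    return query


# Hypothetical free-text synonyms for the P and I of the example question.
concepts = {
    "population": ["preterm labor", "preterm birth", "premature birth"],
    "intervention": ["antenatal corticosteroids", "betamethasone",
                     "dexamethasone"],
}
print(build_query(concepts))
```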

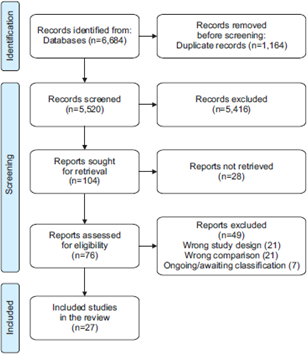

Once the search has been performed, software such as EndNote, Covidence, Rayyan, and EPPI-Reviewer can be used for bibliographic management, removal of duplicate records, and study selection, in which authors check each article found against the pre-specified eligibility criteria. Selection is usually carried out in two consecutive stages: first, the title and abstract of each article are evaluated, and then its full text. Once the included studies have been selected, the characteristics of the studies and the results of interest are extracted. This entire process is usually performed by two or more authors working independently and blinded to each other's decisions, so that their results can be compared and the risk of errors reduced. The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines describe in detail how to report this process, including a flow diagram that graphically shows the selection of articles (example in Figure 1) [34].

Example of a PRISMA flow chart.
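
Dedicated tools such as those named above handle this work in practice. Purely to show the logic, here is a Python sketch of two of the steps, assuming records are dictionaries with hypothetical `id`, `title`, and `doi` fields: duplicates are removed when they share a DOI or a normalized title (a deliberately simple rule; real deduplication usually also compares authors, year, and journal), and records on which two independent reviewers disagree are flagged, to be resolved typically by discussion or by a third reviewer. All records and decisions are fictitious.

```python
import re


def normalize_title(title):
    """Lowercase and drop non-alphanumeric characters so near-identical titles match."""
    return re.sub(r"[^a-z0-9]", "", title.lower())


def deduplicate(records):
    """Keep only the first of any records sharing a DOI or a normalized title."""
    seen, unique = set(), []
    for rec in records:
        keys = {"title:" + normalize_title(rec["title"])}
        if rec.get("doi"):
            keys.add("doi:" + rec["doi"].lower())
        if keys & seen:
            continue  # duplicate of a record already kept
        seen |= keys
        unique.append(rec)
    return unique


def disagreements(decisions_a, decisions_b):
    """IDs of records on which two independent reviewers decided differently."""
    return sorted(rid for rid in decisions_a
                  if decisions_a[rid] != decisions_b[rid])


# Fictitious search results; r2 duplicates r1 despite lacking a DOI.
records = [
    {"id": "r1", "title": "Antenatal corticosteroids for preterm birth",
     "doi": "10.1000/x1"},
    {"id": "r2", "title": "Antenatal Corticosteroids for Preterm Birth.",
     "doi": None},
    {"id": "r3", "title": "Betamethasone dosing in threatened preterm labor",
     "doi": "10.1000/x3"},
]
unique = deduplicate(records)
print(f"Records identified: {len(records)}; after removing duplicates: {len(unique)}")

# Title/abstract decisions made independently (True = keep for full-text review).
reviewer_a = {"r1": True, "r3": False}
reviewer_b = {"r1": True, "r3": True}
print("To resolve by consensus:", disagreements(reviewer_a, reviewer_b))
```

Counts produced at each stage (records identified, duplicates removed, records screened and excluded) are the numbers that feed a PRISMA flow diagram.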

3. Critical assessment of the included studies

Why is it important to critically appraise the included studies?

The certainty of the findings presented in a systematic review depends on the biases of the studies it includes. Bias is a systematic error, or deviation of the results from the truth, that is not due to chance [35]. Although a low risk of bias is often considered equivalent to a high-quality study, the concept of quality is broader, and bias is only one of its dimensions. A standardized assessment of the biases present in the studies gives us an idea of the reliability of their results, which, together with other evaluations, is expressed as the certainty of the evidence using the GRADE methodology [14]. In turn, a correct evaluation of possible biases requires adequate reporting by the authors of the primary studies, following standardized reporting guidelines. For example, clinical trials should follow the Consolidated Standards of Reporting Trials (CONSORT), which provides a basic list of elements to report [36]. However, CONSORT is not a tool for assessing the methodological quality or biases of clinical trials, but rather the quality of their reporting.

How is bias assessed in the studies included in systematic reviews?

Scales or checklists

Scales and checklists are among the most widely used methods. Scales score various quality components and then combine them into a final score, while checklists consist of a series of items or questions that the assessor answers one by one. A limitation of these methods is that they give equivalent weight to different aspects of a study's methodological quality, which may not be appropriate. For example, of two methodological aspects of a clinical trial, a problem with randomization may be a major flaw, whereas the lack of blinding may matter much less in a study that evaluates objective outcomes. The Jadad scale, one of the most widely used scales for clinical trials, has such limitations [37].

Domain-based assessment

Domain-based tools consist of critical assessments made separately for different domains, each related to an independent methodological aspect. Following the above example for clinical trials, methodological problems related to randomization are evaluated and interpreted separately from those related to blinding. This allows reviewers to consider independently how each potential bias may affect the results. Table 3 summarizes the Cochrane RoB 2 tool for clinical trials. For observational studies there are several tools, one of the most widely used being the Newcastle-Ottawa Scale; however, for non-randomized studies of interventions it is increasingly being replaced by ROBINS-I [39]. There are also several tools for diagnostic studies, as well as appraisal packages for different study types, such as those of the Joanna Briggs Institute and the U.S. National Institutes of Health (NIH) [40,41,42,43].
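
In a domain-based tool, judgments are kept separate by domain and only then combined. As an illustration, here is a Python sketch of how the overall RoB 2 judgment is commonly derived from its five domains: "low" if every domain is low, "high" if any domain is high, and "some concerns" otherwise. RoB 2 also allows an overall "high" judgment when several domains raise some concerns; that reviewer judgment is deliberately not automated here. The function name and the trial judgments are hypothetical.

```python
DOMAINS = (
    "randomization process",
    "deviations from intended interventions",
    "missing outcome data",
    "measurement of the outcome",
    "selection of the reported result",
)
VALID = {"low", "some concerns", "high"}


def overall_rob2(judgments):
    """Combine domain-level judgments into an overall RoB 2 judgment."""
    missing = set(DOMAINS) - set(judgments)
    if missing:
        raise ValueError(f"missing domains: {sorted(missing)}")
    levels = [judgments[d] for d in DOMAINS]
    if not set(levels) <= VALID:
        raise ValueError("judgments must be 'low', 'some concerns', or 'high'")
    if "high" in levels:
        return "high"
    if all(level == "low" for level in levels):
        return "low"
    # RoB 2 also permits "high" when several domains raise some concerns;
    # that reviewer judgment is not automated here.
    return "some concerns"


all_low = {d: "low" for d in DOMAINS}
# Hypothetical trials: A has a flawed randomization process; B raises
# some concerns only about missing outcome data.
trial_a = {**all_low, "randomization process": "high"}
trial_b = {**all_low, "missing outcome data": "some concerns"}

for name, judgments in (("Trial A", trial_a), ("Trial B", trial_b)):
    print(name, overall_rob2(judgments))
```

Unlike a summed score, the per-domain dictionary still shows which aspect of the trial drives the overall judgment.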

4. Results summary

Once the results of each study have been extracted and their potential biases critically appraised, the outcome data can be compared and synthesized using statistical analyses. The most commonly used method is meta-analysis, which combines the results of two or more individual studies. The fundamental objective of a meta-analysis is to increase the power and precision of the results by generating a pooled point estimate in which the results of the included studies are weighted. In some cases, it also makes it possible to answer questions not addressed in the individual studies (e.g., through subgroup analyses) and to explore inconsistencies (heterogeneity) in the findings. Various statistical programs are available to perform meta-analyses (e.g., RevMan, Stata, and R).
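
The weighting described above is commonly done by inverse variance, so that more precise studies carry more weight. Below is a minimal Python sketch of a fixed-effect, inverse-variance meta-analysis of log risk ratios; the study results are hypothetical, the 1.96 multiplier assumes a normal approximation for the 95% confidence interval, and real analyses would use the programs mentioned above.

```python
import math


def inverse_variance_pool(estimates, std_errors):
    """Fixed-effect pooled estimate, its standard error, and 95% CI."""
    weights = [1 / se**2 for se in std_errors]
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total
    pooled_se = math.sqrt(1 / total)
    ci = (pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se)
    return pooled, pooled_se, ci


# Hypothetical log risk ratios and standard errors from three trials.
log_rr = [-0.4, -0.2, -0.3]
ses = [0.20, 0.25, 0.15]

pooled, pooled_se, (low, high) = inverse_variance_pool(log_rr, ses)
print(f"Pooled RR {math.exp(pooled):.2f} "
      f"(95% CI {math.exp(low):.2f} to {math.exp(high):.2f})")
print(f"Pooled SE {pooled_se:.3f} vs smallest study SE {min(ses):.3f}")
```

The pooled standard error (about 0.11) is smaller than that of the most precise trial (0.15). This is the gain in precision described earlier, and it says nothing about whether the trials are biased.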

Although every meta-analysis should be performed within a systematic review, not every systematic review includes a meta-analysis, since a statistical combination of studies requires a minimum degree of comparability between their populations, interventions, comparisons, and outcomes. Furthermore, if a systematic review finds no studies, or only one study, addressing the PICO question, no statistical aggregation is possible. Other reasons why a narrative synthesis of the numerical findings is often preferred include:

- Very broad questions that incorporate scattered and non-comparable evidence.

- Lack of statistical information in the individual studies, which prevents conducting a meta-analysis.

- Differences in population, intervention, and comparison that prevent the comparison of study results (clinical heterogeneity).

- Substantial statistical heterogeneity in the meta-analysis that potentially invalidates its results [44].

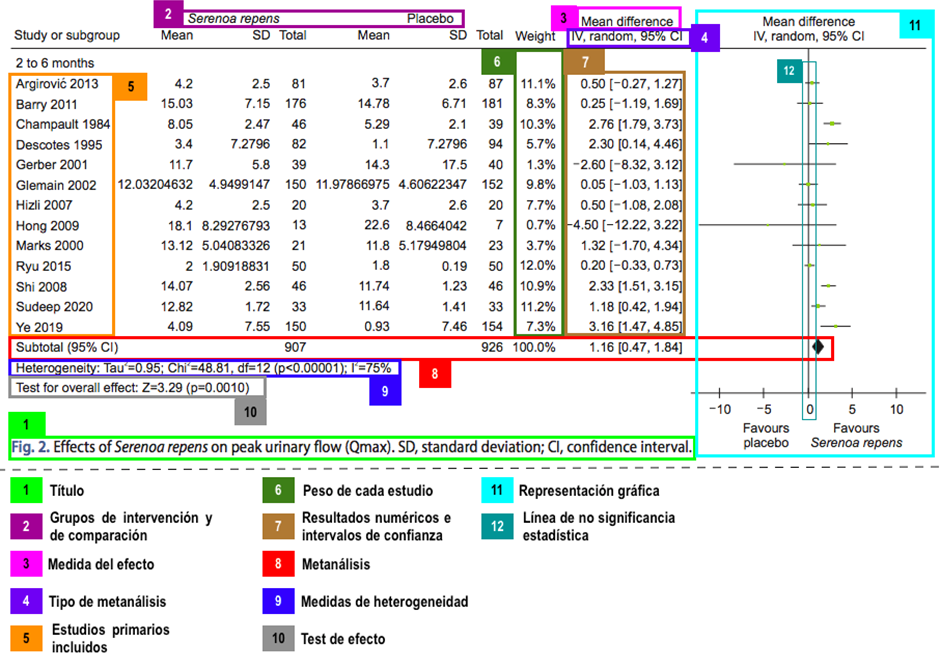

The forest plot is the graphical representation of a meta-analysis; its components are detailed in Figure 2. When interpreting a forest plot, it is important to identify the following key elements:

- Heterogeneity: the degree of similarity between the results of the individual studies, assessed visually or with statistical measures.

- Meta-analysis: the summary measure (represented by a "diamond"), indicating the pooled effect and its confidence interval. This confidence interval should be interpreted in relation to thresholds of clinical significance.

Example of a forest plot and its components.
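
Among the statistical measures of heterogeneity, Cochran's Q and the I² statistic are widely reported. Q sums the weighted squared deviations of each study from the fixed-effect pooled estimate, and I² = max(0, (Q - df) / Q) expresses the percentage of variability attributed to heterogeneity rather than chance, where df is the number of studies minus one. A Python sketch with hypothetical data:

```python
def heterogeneity(estimates, std_errors):
    """Return Cochran's Q, its degrees of freedom, and I^2 (as a percentage)."""
    weights = [1 / se**2 for se in std_errors]
    pooled = sum(w * y for w, y in zip(weights, estimates)) / sum(weights)
    q = sum(w * (y - pooled) ** 2 for w, y in zip(weights, estimates))
    df = len(estimates) - 1
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    return q, df, i2


# Hypothetical log effects: consistent vs conflicting studies, equal precision.
consistent = heterogeneity([-0.30, -0.30, -0.30], [0.1, 0.1, 0.1])
conflicting = heterogeneity([-0.80, 0.10, -0.30], [0.1, 0.1, 0.1])
print(f"Consistent:  Q = {consistent[0]:.1f}, I2 = {consistent[2]:.0f}%")
print(f"Conflicting: Q = {conflicting[0]:.1f}, I2 = {conflicting[2]:.0f}%")
```

With only a few studies these statistics are imprecise, so they complement, rather than replace, visual inspection of the forest plot.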

Like other statistical tests, meta-analyses rely on assumptions that allow results to be combined. Based on these assumptions, there are two types of meta-analysis:

- Fixed-effect meta-analysis: this model assumes that there is a single true effect, which the different studies included in the synthesis estimate with some variability (random error). It does not treat between-study variability as a source of heterogeneity, so it is used under the assumption that the studies are homogeneous.

- Random-effects meta-analysis: this model does not assume a single effect but rather multiple possible true effects estimated by the included studies. In addition to variability due to random error, it incorporates variability between studies. It is regarded as the more conservative model, since it does not assume homogeneity between studies and, in the presence of heterogeneity, produces less precise results (wider confidence intervals) [46,47].
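
The difference between the two models lies in how the study weights are computed. Below is a Python sketch of the random-effects model using the DerSimonian-Laird estimator of the between-study variance (tau²); this is one of several available estimators, chosen here only for its simplicity, and the data are hypothetical.

```python
import math


def pool(estimates, std_errors, tau2=0.0):
    """Inverse-variance pooling; tau2 = 0 gives the fixed-effect model."""
    weights = [1 / (se**2 + tau2) for se in std_errors]
    total = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total
    return pooled, math.sqrt(1 / total)


def tau2_dersimonian_laird(estimates, std_errors):
    """Method-of-moments estimate of between-study variance, truncated at 0."""
    w = [1 / se**2 for se in std_errors]
    fixed, _ = pool(estimates, std_errors)
    q = sum(wi * (y - fixed) ** 2 for wi, y in zip(w, estimates))
    c = sum(w) - sum(wi**2 for wi in w) / sum(w)
    df = len(estimates) - 1
    return max(0.0, (q - df) / c) if c > 0 else 0.0


# Hypothetical, clearly heterogeneous log effects with equal precision.
effects = [-0.80, 0.10, -0.30]
ses = [0.1, 0.1, 0.1]

tau2 = tau2_dersimonian_laird(effects, ses)
fe_est, fe_se = pool(effects, ses)
re_est, re_se = pool(effects, ses, tau2)
print(f"tau2 = {tau2:.3f}")
print(f"Fixed effect:   {fe_est:.2f} (SE {fe_se:.3f})")
print(f"Random effects: {re_est:.2f} (SE {re_se:.3f})")
```

Because the studies here are equally precise, both models give the same point estimate, but the random-effects standard error is much larger (about 0.26 vs 0.06), which is why this model is described as more conservative when heterogeneity is present. When study sizes differ, random-effects weights also become more similar to each other, giving small studies relatively more weight.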

The meta-analyses represented in forest plots are called direct or pairwise meta-analyses. A different approach, network meta-analysis, uses evidence from both direct and indirect comparisons. This type of study is described in detail in another article of this methodological series [18].
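
The simplest form of indirect comparison is Bucher's adjusted indirect comparison: if treatments A and C have each been compared with a common comparator B, the effect of A versus C on a log scale is estimated as the difference between the two direct effects, and their variances add. Network meta-analysis generalizes this idea and combines it with direct evidence. The Python sketch below shows only this basic step, with hypothetical numbers, and assumes the trials are similar enough for the comparison to be valid (transitivity).

```python
import math


def indirect_comparison(d_ab, se_ab, d_cb, se_cb):
    """Bucher indirect estimate of A vs C through common comparator B (log scale)."""
    d_ac = d_ab - d_cb
    se_ac = math.sqrt(se_ab**2 + se_cb**2)
    return d_ac, se_ac


# Hypothetical log odds ratios of drugs A and C, each versus placebo (B).
d_ac, se_ac = indirect_comparison(d_ab=-0.5, se_ab=0.15, d_cb=-0.2, se_cb=0.20)
low, high = d_ac - 1.96 * se_ac, d_ac + 1.96 * se_ac
print(f"OR A vs C: {math.exp(d_ac):.2f} "
      f"(95% CI {math.exp(low):.2f} to {math.exp(high):.2f})")
```

The indirect estimate (SE 0.25) is less precise than either of the direct comparisons it is built from.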

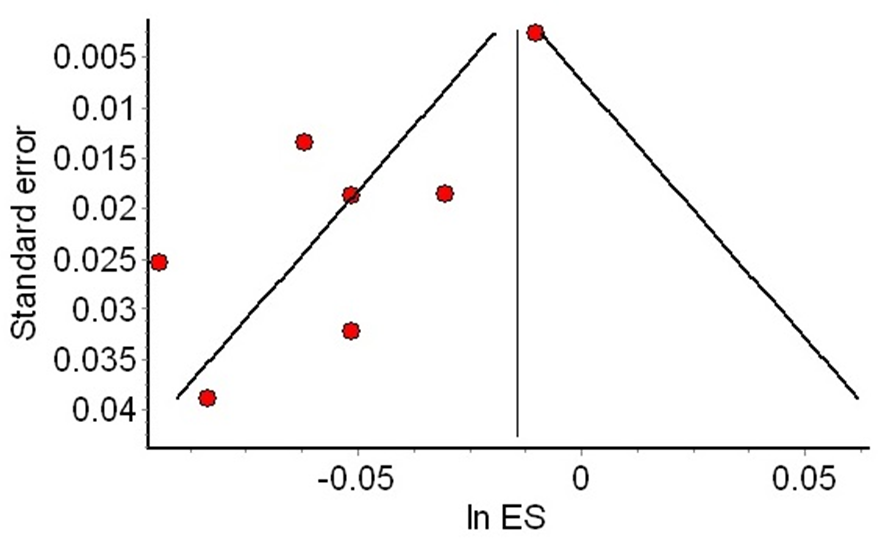

The funnel plot is an additional graphical representation that plots the effect estimates of individual studies against their precision: the less precise studies lie at the base of the plot (usually more widely scattered around the meta-analysis estimate), and the more precise studies lie at the top, usually concentrated around the meta-analysis estimate (see Figure 3). When small studies are asymmetrically scattered (small-study effect), publication bias may be suspected. However, there are alternative explanations for this phenomenon, so it should be interpreted with caution.

Example of an asymmetric funnel plot.

Notes: The red dots representing the smaller studies are asymmetrically distributed to the left of the meta-analysis estimate (vertical line). In contrast, the more precise studies are at the top of the funnel, close to the meta-analysis estimate (red dot at the upper right).
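
A commonly used statistical complement to visual inspection of the funnel plot is Egger's regression test, which regresses each study's standardized effect (estimate divided by its standard error) on its precision (one over the standard error); an intercept far from zero suggests asymmetry. The Python sketch below uses hypothetical data with only five studies for brevity, although such tests are generally advised only when about ten or more studies are available. The p-value, from a t distribution with k - 2 degrees of freedom, is not computed here to avoid external dependencies.

```python
import math


def egger_test(estimates, std_errors):
    """Egger's regression of y/se on 1/se.

    Returns the intercept, its standard error, and the t statistic
    (k - 2 degrees of freedom). An intercept far from 0 suggests asymmetry.
    """
    k = len(estimates)
    if k < 3:
        raise ValueError("at least three studies are needed")
    x = [1 / se for se in std_errors]
    y = [est / se for est, se in zip(estimates, std_errors)]
    mx, my = sum(x) / k, sum(y) / k
    sxx = sum((xi - mx) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("standard errors must differ between studies")
    slope = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    intercept = my - slope * mx
    residuals = [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]
    s2 = sum(r**2 for r in residuals) / (k - 2)
    se_int = math.sqrt(s2 * (1 / k + mx**2 / sxx))
    t_stat = intercept / se_int if se_int > 0 else float("nan")
    return intercept, se_int, t_stat


# Hypothetical log effects: the least precise studies show the largest
# benefits, the pattern that produces an asymmetric funnel plot.
effects = [-0.20, -0.25, -0.40, -0.60, -0.80]
ses = [0.05, 0.10, 0.20, 0.30, 0.40]

intercept, se_int, t_stat = egger_test(effects, ses)
print(f"Egger intercept {intercept:.2f} (SE {se_int:.2f}), t = {t_stat:.2f}")
```

As with visual inspection, a significant test indicates small-study effects, not necessarily publication bias.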

5. Formulating conclusions in systematic reviews

Considering the multiple steps of a systematic review, the strengths and weaknesses of the synthesized body of evidence should be identified when formulating conclusions. Because multiple systems existed for classifying these strengths and weaknesses, the GRADE methodology was developed to standardize this assessment. GRADE categorizes the certainty of the evidence into four levels (high, moderate, low, and very low). This certainty is determined by the design of the included primary studies and by factors that can raise or lower it; the factors that can lower it are:

- Risk of bias.

- Inconsistency.

- Indirect evidence.

- Imprecision.

- Publication bias.
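
The way these factors move certainty between levels can be sketched in Python. This is a deliberate simplification: in GRADE, evidence from randomized trials starts at high certainty and evidence from observational studies at low, each serious concern lowers certainty by one level (two if very serious), and factors such as a large magnitude of effect or a dose-response gradient can raise it. Real GRADE ratings are structured judgments, not arithmetic, and the function name and example are hypothetical.

```python
LEVELS = ("very low", "low", "moderate", "high")
DOWNGRADE_DOMAINS = {"risk of bias", "inconsistency", "indirect evidence",
                     "imprecision", "publication bias"}


def grade_certainty(randomized, downgrades, upgrades=0):
    """Simplified GRADE certainty rating for one outcome.

    randomized: True for randomized trials (start high), False for
                observational studies (start low).
    downgrades: domain -> 0 (not serious), 1 (serious) or 2 (very serious).
    upgrades:   levels added, e.g. for a large effect.
    """
    unknown = set(downgrades) - DOWNGRADE_DOMAINS
    if unknown:
        raise ValueError(f"unknown domains: {sorted(unknown)}")
    if any(v not in (0, 1, 2) for v in downgrades.values()):
        raise ValueError("each domain can lower certainty by 0, 1, or 2 levels")
    start = 3 if randomized else 1
    level = start - sum(downgrades.values()) + upgrades
    return LEVELS[max(0, min(level, 3))]


# Hypothetical outcome from randomized trials with serious risk of bias
# and serious imprecision: high -> moderate -> low.
print(grade_certainty(True, {"risk of bias": 1, "imprecision": 1}))
```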

This methodology has been detailed in another article of this methodological series [14]. Although the GRADE methodology is used to determine the certainty of evidence and generate recommendations, it is important to note that systematic reviews alone are insufficient to generate a health recommendation [4].

Some limitations and problems of systematic reviews

Despite their many advantages for evidence-based decision-making, systematic reviews, like any other scientific study, are not free of problems:

- Low methodological quality: multiple scales and checklists are available to evaluate the methodological quality of systematic reviews. Most of them assess transparency, internal and external validity, and risk of bias, among other aspects. The quality of a systematic review can be compromised by shortcomings in its design and analysis, such as a limited search strategy, an inadequate assessment of risk of bias, or the unjustified exclusion of primary studies. Therefore, available systematic reviews must be appraised critically.

- Redundancy and duplication: there are overlapping systematic reviews that provide no new information, since they largely draw on the same primary studies already included in other systematic reviews.

- Garbage-in/garbage-out: a systematic review of good methodological quality can still include poor-quality primary studies (garbage-in). Synthesizing poor-quality studies yields results of low certainty (garbage-out) [48].

- Problematic studies: reviews may be subject to other problems, such as the inclusion of fraudulent studies and conflicts of interest of the authors that could bias the findings [49]. As with the other issues affecting systematic reviews, strategies have emerged to mitigate them, such as stricter editorial policies on conflicts of interest among authors of systematic reviews [50,51].

- Lack of updating: conducting a systematic review usually takes a long time, which makes it difficult to include recent primary studies [52]. One methodological advance that can counteract this problem is the living systematic review, which is updated continuously and is described thoroughly in another article of this methodological series [17].

- Conflicts of interest: just as conflicts of interest among the authors or funders of a primary study can bias its results, systematic reviews are also susceptible to this type of influence [53]. For this reason, Cochrane has one of the strictest conflict of interest policies among journals, intended to safeguard the independence of its reviews [51].
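
Overlap between reviews on the same question, the redundancy problem described above, can be quantified. One measure proposed in the methodological literature, the corrected covered area (CCA), is computed from a citation matrix of primary studies (rows) by reviews (columns) as CCA = (N - r) / (r × c - r), where N is the total number of inclusions counted across all reviews, r is the number of unique primary studies, and c is the number of reviews. A Python sketch with hypothetical reviews and study identifiers:

```python
def corrected_covered_area(reviews):
    """CCA = (N - r) / (r * c - r) for a dict of review -> set of study IDs."""
    c = len(reviews)
    r = len(set().union(*reviews.values()))
    n = sum(len(studies) for studies in reviews.values())
    if c < 2 or r == 0:
        raise ValueError("need at least two reviews and one primary study")
    return (n - r) / (r * c - r)


# Hypothetical reviews on the same question and the trials each included.
reviews = {
    "Review 1": {"S1", "S2", "S3"},
    "Review 2": {"S1", "S2", "S4"},
    "Review 3": {"S1", "S2", "S3"},
}
print(f"CCA = {corrected_covered_area(reviews):.1%}")
```

A CCA of 0 means the reviews share no primary studies, and 1 means every review included exactly the same studies.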

As users of systematic reviews, we should use tools to identify some of these problems. The best-known tools are PRISMA [34] for reporting quality, AMSTAR 2 [9] for methodological quality, and ROBIS [54] for risk of bias assessment.
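
AMSTAR 2 does not produce a summary score. Its developers instead propose rating overall confidence in a review's results according to the number of critical flaws (in items they consider critical, such as protocol registration, adequacy of the literature search, and risk of bias assessment) and of non-critical weaknesses. The Python sketch below encodes that rating scheme; identifying and counting the flaws remains the reader's task, and the function name and counts are hypothetical.

```python
def amstar2_confidence(critical_flaws, noncritical_weaknesses):
    """Overall confidence in a review's results following the AMSTAR 2 scheme."""
    if critical_flaws < 0 or noncritical_weaknesses < 0:
        raise ValueError("counts must be non-negative")
    if critical_flaws > 1:
        return "critically low"
    if critical_flaws == 1:
        return "low"  # one critical flaw, with or without other weaknesses
    if noncritical_weaknesses > 1:
        return "moderate"
    return "high"  # no or one non-critical weakness


# Hypothetical appraisal: no critical flaws, three non-critical weaknesses.
print(amstar2_confidence(critical_flaws=0, noncritical_weaknesses=3))
```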

Conclusions

Systematic reviews are a type of research design that identifies, compiles, critically analyzes, and synthesizes the available evidence, making it easier for health professionals to use evidence in decision-making. Additionally, following a protocol based on a structured methodology reduces bias during the synthesis of information. Knowing the structure, steps, and limitations of systematic reviews therefore enables us to read them critically and comprehensively, and appraisal tools are essential for identifying and assessing their problems.