Methodological notes

Published on January 5, 2021 | http://doi.org/10.5867/medwave.2021.01.8090

Rapid reviews: definitions and uses

Abstract

This article is the first in a collaborative methodological series of narrative reviews on biostatistics and clinical epidemiology. This review aims to present rapid reviews, compare them with systematic reviews, and describe how they can be used. Rapid reviews use a methodology similar to that of systematic reviews, but by applying shortcuts they can provide answers in less than six months and with fewer resources. Decision-makers use them in both the Americas and Europe. There is no consensus on which shortcuts have the least impact on the reliability of conclusions, so rapid reviews are heterogeneous. Users of rapid reviews should identify these shortcuts in the methodology and be cautious when interpreting the conclusions, although rapid reviews generally reach answers concordant with those obtained through a formal systematic review. The principal value of rapid reviews is that they respond to health decision-makers’ needs when the context demands answers within limited time frames.

Main messages

- Rapid reviews are similar to systematic reviews but take shortcuts to reduce production time.

- Rapid reviews have neither a standardized definition nor a standardized methodology.

- Rapid reviews are used by health decision-makers, both for clinical practice and public policy design.

- Rapid reviews are particularly useful in time- and resource-restricted scenarios.

Introduction

Systematic reviews are currently considered the best option to inform the decisions[1] of clinicians and health decision-makers because they synthesize the relevant scientific evidence on a subject while following high methodological standards. However, the process is expensive and slow and can take over three years to complete[2], which can delay answers to the questions posed for a long time, potentially affecting clinical decision-making and health policy design.

Rapid reviews emerged to synthesize knowledge using the components of a systematic review, some of which are simplified or omitted to produce information in less time and with fewer resources, meeting the needs of decision-makers[3],[4]. These simplified processes are known as “shortcuts”. While they could decrease the reliability of the conclusions[5], studies have concluded that, especially for therapeutic interventions, they do not change the results drastically[6],[7].

Without a universal definition, rapid reviews are presented as a heterogeneous set of products without standardized production methods[8]. Nor is it known which shortcuts have the least impact on their quality[9]. There is agreement that a rapid review requires from one to six months to produce, depending on the needs of decision-makers[10]. This short timeframe has increased demand for them and led organizations such as the World Health Organization to use these methods to prepare guidelines in a limited time[11],[12], and the Cochrane Collaboration to establish a working group on rapid reviews in 2015.

This article is the first in a methodological series of narrative reviews on general topics in biostatistics and clinical epidemiology. The series explores and summarizes, in accessible language, published articles available in the main databases and specialized reference texts, and it is aimed at training undergraduate students. The Evidence-Based Medicine Department of the School of Medicine of the Universidad de Valparaíso, Chile, the Research Department of the Instituto Universitario Hospital Italiano de Buenos Aires, Argentina, and the Evidence Center UC of the Universidad Católica, Chile, have collaborated on the series. The main purpose of this article is to introduce the concept of rapid reviews, their similarities to and differences from systematic reviews, and their usefulness as a tool to synthesize evidence with fewer resources.

General concepts of systematic reviews

Systematic reviews are the best evidence-synthesis design to achieve reliable conclusions[13], especially when designing public policies or clinical practice guidelines[14].

A good-quality systematic review should have an explicit protocol established “a priori” and ideally kept unchanged during the process, which allows evaluators to reproduce the process and critically analyze its methodology[5]. This protocol should be registered in a specialized database, such as PROSPERO, or published in a scientific journal.

The research question must be well structured (in PICO format)[15], clinically relevant, and have a scope and complexity determined by the authors according to the needs of stakeholders[8]. The search strategy should be comprehensive and highly sensitive, covering multiple electronic databases of published studies, documents not published through conventional channels (grey literature), and publications from journals or scientific societies, and sometimes even reaching out to experts to identify ongoing studies. Such a search requires substantial resources to identify eligible studies[9].

The selection of studies (inclusion and exclusion) and the critical assessment of their risk of bias involve at least two independent reviewers[15]. Therefore, the time needed to complete the process depends on the amount of evidence found, the number of studies selected by the reviewers for full-text evaluation, and the extraction of their results.

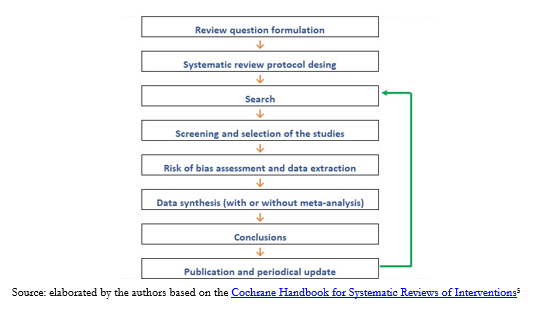

We can outline the process in the following steps (Figure 1):

It is therefore necessary to know which stages of a systematic review can be simplified and what implications these shortcuts have for the conclusions reached[13].

Rapid reviews

Currently, rapid reviews are used to develop public health policies in the Americas and Europe[5],[16]; health technology assessment reports conducted in Latin America are examples of the rise of this type of methodological design. Locally, the Epistemonikos[17] and LOVE[18],[19] initiatives stand out as facilitators in the search for rapid reviews[20]. Their ability to balance an abbreviated process with sufficient methodological rigor makes rapid reviews a real alternative for evidence synthesis when moderate confidence in the information is acceptable and timeframes are limited[10].

Nonetheless, the authors must explain the methodological limitations and the consequent risk of design bias[21],[22]. Readers of these abbreviated syntheses should be cautious when analyzing the methodology and the shortcuts used by reviewers, taking into account the authors’ confidence in their conclusions[6].

Due to the lack of consensus on how to define them, rapid reviews are difficult to identify if their titles are not explicit. Most of them are found in the grey literature[5]. Also, because they are commissioned directly by stakeholders such as ministries of health or policy-makers[5], many are not in the public domain.

The shortcuts used by the authors of published rapid reviews are inconsistent, which creates high heterogeneity in this type of evidence synthesis. Further studies are needed to properly assess which parts of the systematic review process can be simplified with the least impact on the conclusions[10]. However, when a rapid review includes at least ten studies, it is less likely to reach conclusions different from those of a traditional systematic review[6].

As mentioned, rapid review methods are not standardized. Nevertheless, PRISMA, an initiative that seeks to standardize the reporting of systematic reviews, has been updating its guidance to incorporate formats such as rapid reviews since 2018[23].

Review record

While databases such as PROSPERO accept the registration of rapid review protocols, not all rapid reviews include this step in their production[21]. Failure to register or publish the protocol increases the risk of reporting bias[15].

Review question

Rapid reviews use a question with a PICO structure, but it should have a specific scope so that the review does not become a quick substitute for a full systematic review[24]. The question must be specific, or have a limited scope, according to the stakeholders’ objectives[10], and the objective will largely determine how long the review takes. Topics that require assessing multiple or complex interventions are generally beyond the reach of rapid reviews[8].

Bibliographic search

As a first step, authors can look for an existing high-quality systematic review to avoid duplicating effort and instead carry out a quick update of it; for example, they can search a small number of databases starting from that review’s search date. This abbreviated search can be carried out in one of the leading electronic bibliographic sources, PubMed/MEDLINE being the most widely used[25], given that it includes, on average, more than 80% of the studies usually included in systematic reviews[6].
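The coverage figure above can be read as a simple proportion: of the studies included in a full systematic review, how many does an abbreviated, single-database search retrieve? The sketch below is a minimal illustration under stated assumptions; the study identifiers are hypothetical, and the function name `search_coverage` is ours, not part of any tool cited in this article.

```python
def search_coverage(reference_ids: set[str], retrieved_ids: set[str]) -> float:
    """Share of a reference review's included studies found by an abbreviated search.

    reference_ids: studies included in a full systematic review (hypothetical IDs).
    retrieved_ids: records returned by the abbreviated search (e.g., one database).
    """
    if not reference_ids:
        raise ValueError("the reference set must not be empty")
    found = reference_ids & retrieved_ids  # included studies that the search also retrieved
    return len(found) / len(reference_ids)


# Hypothetical example: 10 studies included in a full review; the single-database
# search retrieves 8 of them plus 2 records that the review did not include.
reference = {f"study{i}" for i in range(1, 11)}
single_db_hits = {f"study{i}" for i in range(1, 9)} | {"other1", "other2"}

coverage = search_coverage(reference, single_db_hits)
print(f"Coverage: {coverage:.0%}")  # Coverage: 80%
```

Note that coverage only counts studies the full review judged eligible; the two extra records still cost screening time even though they do not affect the proportion.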

Other shortcuts used to simplify the search include:

- Removing the manual search for evidence (non-electronic sources) and the search of grey literature[5].

- Limiting the search to studies in specific languages[5],[26], for example, only studies published in English.

- Limiting the search by publication date, for example, to studies published in the last ten years[5]. This is the shortcut most often used by published rapid reviews[21].

- Limiting the eligible study designs, for example, to randomized clinical trials only[8].

These shortcuts may increase the risk of publication bias and selection bias[6],[10].
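The search limits listed above act as filters on the set of candidate records. The following is a minimal sketch, assuming a hypothetical `Record` type with language, publication year, and design fields; real bibliographic databases apply such limits through their own query syntax, which this example does not model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    """A hypothetical bibliographic record returned by a database search."""
    title: str
    language: str
    year: int
    design: str


def apply_search_limits(records, languages=None, min_year=None, designs=None):
    """Keep only records that pass every limit that is set (None means no limit)."""
    kept = []
    for r in records:
        if languages is not None and r.language not in languages:
            continue  # language limit
        if min_year is not None and r.year < min_year:
            continue  # publication-date limit
        if designs is not None and r.design not in designs:
            continue  # study-design limit
        kept.append(r)
    return kept


records = [
    Record("Trial A", "English", 2019, "RCT"),
    Record("Cohort B", "English", 2016, "cohort"),
    Record("Ensayo C", "Spanish", 2020, "RCT"),
    Record("Trial D", "English", 2005, "RCT"),
]

# English only, 2011 onward (roughly the last ten years if searching in 2020), RCTs only.
limited = apply_search_limits(records, languages={"English"}, min_year=2011, designs={"RCT"})
print([r.title for r in limited])  # ['Trial A']
```

In this toy example the Spanish-language trial and the older trial are dropped even though both are randomized trials, which is the kind of selection bias these shortcuts can introduce.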

Study selection and data extraction

Authors may decide not to perform study selection and data extraction in duplicate[10]. This decision can speed up a rapid review; however, both steps will then carry a higher risk of error and bias[27],[28],[29]. Another shortcut used at this stage is to consider fewer outcomes when selecting studies[9], for example, considering only mortality as a relevant outcome. One disadvantage of this shortcut is that it is no longer possible to assess potential selective reporting of outcomes in the primary studies.
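To make the difference concrete, the sketch below contrasts duplicate, independent screening with the single-reviewer shortcut. It is a hypothetical illustration: the record IDs and decisions are invented, and it assumes that disagreements are simply flagged for discussion or for a third reviewer.

```python
def reconcile(reviewer_a: dict[str, bool], reviewer_b: dict[str, bool]):
    """Combine two reviewers' independent include (True) / exclude (False) decisions.

    Only records screened by both reviewers are considered. Returns three sorted
    lists: agreed inclusions, agreed exclusions, and conflicts to be resolved by
    discussion or a third reviewer.
    """
    included, excluded, conflicts = [], [], []
    for record_id in sorted(reviewer_a.keys() & reviewer_b.keys()):
        a, b = reviewer_a[record_id], reviewer_b[record_id]
        if a and b:
            included.append(record_id)
        elif not a and not b:
            excluded.append(record_id)
        else:
            conflicts.append(record_id)
    return included, excluded, conflicts


# Hypothetical title/abstract decisions for four records.
reviewer_a = {"r1": True, "r2": False, "r3": True, "r4": False}
reviewer_b = {"r1": True, "r2": False, "r3": False, "r4": True}

included, excluded, conflicts = reconcile(reviewer_a, reviewer_b)
print("Included:", included)    # Included: ['r1']
print("Excluded:", excluded)    # Excluded: ['r2']
print("Conflicts:", conflicts)  # Conflicts: ['r3', 'r4']

# Single-reviewer shortcut: reviewer A's decisions are final and unchecked.
single = [rid for rid, keep in reviewer_a.items() if keep]
print("Single reviewer includes:", single)  # Single reviewer includes: ['r1', 'r3']
```

With a single reviewer, record r4 would have been excluded without anyone noticing the disagreement; duplicate screening surfaces such cases at the cost of extra reviewer time.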

Risk of bias assessment

Some rapid reviews omit the risk of bias assessment as a shortcut; however, this is not recommended due to the high impact on the reliability of the conclusions[30],[31]. By not conducting a critical appraisal, the quality of the evidence on which the conclusions are formulated is unknown[32],[33].

Synthesis of the evidence

The authors of rapid reviews may choose not to conduct a meta-analysis and instead describe quantitative findings narratively; in fact, more than two-thirds of rapid reviews present their results this way[21].
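For readers unfamiliar with what a meta-analysis adds over a narrative description, the sketch below shows one common approach, fixed-effect inverse-variance pooling, in which each study is weighted by the inverse of its variance. The choice of a fixed-effect model is an assumption made for illustration (many reviews use random-effects models instead), and the log risk ratios and standard errors are invented.

```python
import math


def fixed_effect_pool(estimates, standard_errors):
    """Inverse-variance fixed-effect pooling of study estimates (e.g., log risk ratios).

    Returns the pooled estimate, its standard error, and a 95% confidence interval
    on the same (log) scale as the inputs.
    """
    if not estimates or len(estimates) != len(standard_errors):
        raise ValueError("need one standard error per estimate")
    # Each study's weight is the inverse of its variance (1 / SE^2).
    weights = [1.0 / s ** 2 for s in standard_errors]
    pooled = sum(w * y for w, y in zip(weights, estimates)) / sum(weights)
    pooled_se = math.sqrt(1.0 / sum(weights))
    ci = (pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se)
    return pooled, pooled_se, ci


# Hypothetical log risk ratios and standard errors from three trials.
log_rr = [-0.2, -0.1, -0.3]
std_errors = [0.1, 0.2, 0.1]

pooled, pooled_se, (low, high) = fixed_effect_pool(log_rr, std_errors)
# Back-transform from the log scale to report a risk ratio.
print(f"Pooled RR {math.exp(pooled):.2f} (95% CI {math.exp(low):.2f} to {math.exp(high):.2f})")
```

A narrative synthesis would describe the three results one by one; pooling yields a single estimate with a confidence interval, which is what a rapid review forgoes when it skips this step.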

Conclusions of a rapid review

Few studies have evaluated the robustness of rapid reviews, so the available evidence is limited[9]. Some studies have found that the conclusions of rapid reviews do not differ substantially from those of a traditional systematic review[5],[24]. However, because of the limitations that shortcuts may introduce, their conclusions are less reliable[9] and must be interpreted with caution by their users. Reliability also depends on the quality of the studies included in each rapid review[32],[33].

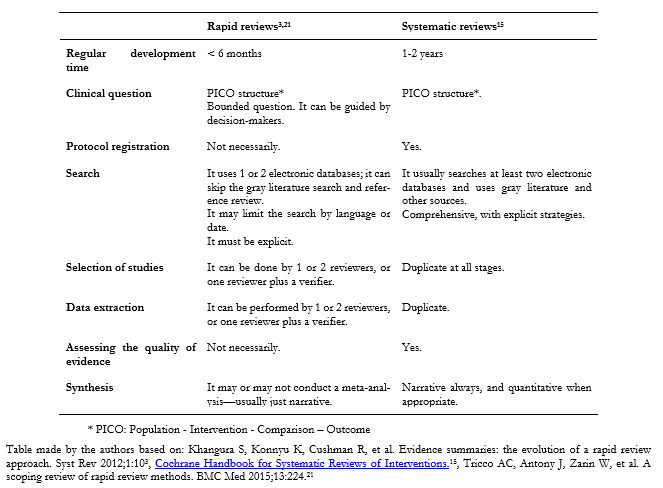

Table 1 compares the main differences between a systematic review and a rapid review.

When is a rapid review helpful?

Considering all of the above, it is clear that the methodological design of rapid reviews is not appropriate in all contexts. Below we mention some circumstances in which it is:

- When there are time restrictions for conducting a synthesis of evidence, as in health emergencies such as the COVID-19 pandemic[4].

- When there are resource restrictions, whether economic or human, to carry out a synthesis of evidence on a specific topic.

- When health centers evaluate aspects of their services beyond healthcare, such as comparing the amount of litigation arising from their services with the rates reported by other local or international centers[34].

- When evaluating new evidence on a topic on which there is already broad consensus.

- In general, in any case where decision-makers (clinicians or health policy-makers) can act on a less reliable synthesis of the evidence in order to meet tight timeframes[10].

Some limitations of rapid reviews

It must be noted that a systematic review can be carried out quickly while maintaining its characteristic methodological rigor[35],[36], without using shortcuts. This is one of the main criticisms of rapid review methodology, because technological tools now make it possible to optimize human resources and streamline many of the processes of a systematic review[37],[38]. If sufficient resources (human, technological, economic) are available, it would be best not to take shortcuts. Another criticism of rapid reviews is that they cannot address very specific clinical questions[30].

On the other hand, there are currently no standardized tools to evaluate the methodological quality of rapid reviews in contrast to tools such as ROBIS[39] and AMSTAR-2[40] that evaluate the methodological quality of systematic reviews.

Conclusions

Rapid reviews are abbreviated syntheses of evidence that include the essential steps of a traditional systematic review but simplify or omit some steps to achieve results faster and with fewer resources. They have no consensus definition, and to date there are no widely used guidelines, which makes them methodologically heterogeneous. The quality of a rapid review depends directly on the shortcuts implemented and on how they may affect the results.

Rapid reviews are becoming more common due to their ability to answer specific questions within six months; however, they should be interpreted critically because of the limitations of their methodology. Authors should explain their methods, list the shortcuts used, and warn of possible biases. With these precautions, rapid reviews generally achieve results consistent with those obtained by a traditional systematic review, although with lower reliability.

Rapid reviews are booming, and the design will likely be standardized in the coming years. Rapid reviews may become one of the best tools to answer specific and relevant questions in health decision-making.