Original studies

Published on November 29, 2022 | http://doi.org/10.5867/medwave.2022.10.2654

An exploratory study of citations in systematic reviews of randomized trials published in Latin American journals

Abstract

Introduction The prevalence of inclusion of randomized controlled trials published in Latin American journals has not been evaluated yet. This study explores the extent to which randomized trials published in Latin American medical journals are cited and used in systematic reviews.

Methods We did a descriptive observational study on randomized trials published in MEDLINE-indexed Latin American journals from 2010 to 2015. The primary outcome was the inclusion of these trials in systematic reviews. The secondary outcome was the total number of citations each trial received, as reported by Google Scholar.

Results Twenty-nine journals were selected. After searching these journals, we found 135 trials that fulfilled the inclusion criteria, accounting for 2% of all research articles published in these journals. Of these, 55 (41%) were included in at least one of the 202 systematic reviews that cited trials from this set. Of the nine randomized trials most cited by systematic reviews and meta-analyses, only two were published in Spanish. Nine received zero citations by any article type. Most had small sample sizes.

Conclusions The overall impact of randomized controlled trials published in Latin American journals is low. Little funding, language bias and small sample sizes may explain the low inclusion in systematic reviews and meta-analyses.

Introduction

Systematic reviews (SRs) of the evidence on the benefits and risks of medical interventions have become the cornerstone of evidence-based medicine. They can influence decision-making in clinical practice and public health medicine, identify areas where more research is needed and guide resource allocation [1]. The methodological quality of a systematic review also depends on whether the most relevant studies are included [2,3,4]. To minimize bias due to selective data availability, authors of systematic reviews of healthcare interventions should identify as many relevant randomized studies as possible to provide reliable evidence on which to base healthcare decisions [5,6,7].

The main challenge is to identify all relevant studies. Complete identification, even of published reports of controlled trials, remains challenging. For example, electronic searching of controlled trials in the National Library of Medicine’s PubMed/MEDLINE database usually retrieves only half of the relevant studies [5,7]. Many of the missing articles are included in PubMed/MEDLINE but may be inaccurately indexed [5], while others are in non-MEDLINE journals, underscoring the importance of hand searching [8,9].

However, the dissemination of medical evidence, including randomized controlled trials (RCTs) results, is influenced by several factors that affect the likelihood of inclusion in a meta-analysis [10,11,12]. Researchers working in non-English-speaking countries often publish their findings in national journals. When their results are important or novel, they more frequently submit them to English-language journals [11]. The result may be an English-language bias in systematic reviews and meta-analyses if they are based exclusively on reports written in English. Nonetheless, the importance of this shortcoming in meta-analytic evidence synthesis is not yet evident [13].

Systematic bias due to the selection of studies in a particular language is called language bias [8]. The Cochrane Collaboration recommends extensive literature searches covering all relevant languages to prevent language bias [14]. Some studies have suggested that excluding trials reported in languages other than English may introduce bias resulting in an overestimation of the treatment effect [10,11,12,15], which may lead to inappropriate health policies and patient care decisions [10]. In this context, the impact of RCTs published in Latin American journals, as determined by SR inclusion, has not been evaluated. Consequently, this study aims to explore the extent to which RCTs published in Latin American medical journals indexed in MEDLINE are cited and used in systematic reviews either in the narrative evidence synthesis or the meta-analysis. Secondary outcomes are the total number of accrued citations and the reasons for non-inclusion in the meta-analyses.

Methods

We did a descriptive observational study on RCTs published in MEDLINE-indexed Latin American journals from 2010 to 2015 to explore the impact of these trials on systematic evidence synthesis.

We created a database of all Latin American medical and dental journals with a current indexation in MEDLINE at the time of data retrieval. We chose MEDLINE as the journal source for the RCT retrieval to ensure a standard quality threshold for all journals. We included general medicine, medical specialties, and dentistry journals with a reported country of origin in Spanish-speaking Latin American countries. Brazilian journals were excluded from our study. Then, we listed the journals alphabetically by country of origin, divided them into three groups, and assigned each group to one of three data extractors (JC, EK, JL).

To retrieve the RCTs, we searched the volumes of each journal on the official websites. Then, each data extractor hand-searched the table of contents of each assigned journal to find the RCTs. First, we screened by title and abstract in any section called ‘Original Articles,’ or any equivalent term. The full text was only perused if there were doubts about the study design. Each journal was assessed by two reviewers in parallel and independently. Discrepancies were resolved by discussion and consensus, and when this was not possible, a fourth experienced reviewer (JV) decided if the article should be included or not.

We included any original study reporting a randomized controlled design, published between 2010 and 2015, years inclusive, in English or Spanish. We set 2015 as the upper cutoff, rather than a more recent year, to allow enough time for an RCT to be cited or included in a subsequent systematic review. Small pilot studies were also selected if they followed a randomized design. We excluded quasi-experimental studies, observational studies, narrative reviews, clinical guidelines, consensus statements, conference proceedings, protocols, and letters to the editor.

We uploaded the RCTs to Zotero to produce a master list by publication date. The metadata associated with each digital object identifier (DOI) was established as the reference information for the citation searches. Retrieving the metadata was necessary because the article titles were generally published in Spanish while the metadata was in English. For search purposes, we therefore used the metadata version as shown by Zotero.

The RCTs were divided into thirds by title, and each third was assigned to a reviewer. To examine whether the RCTs were included in systematic reviews, each reviewer searched the title in Google Scholar and browsed all citations reported for the article. We chose this data source because of its potential to provide comprehensive scientific and academic citation information besides having a user-friendly design.

We searched for citations in systematic reviews from June 26 to 28, 2021. We excluded any citations that did not have a systematic review design reported in the title, abstract, or main text. Integrative reviews, scoping reviews, and systematic review protocols were not eligible for citation counts. We did not exclude network meta-analyses.

Some systematic reviews are published in multiple languages or repositories other than the original journal, thus generating duplicate citations. When this occurred, we used the version where the systematic review was initially published.

Our primary outcome was the inclusion of our population of RCTs in systematic reviews with or without meta-analysis. The secondary outcomes were a) the total number of citations that each RCT received as reported by Google Scholar and b) in the case that an RCT was not included in the meta-analysis, the reasons for this exclusion.

For the journals, we extracted the name, country, International Standard Serial Number, and website as reported in the National Library of Medicine journals catalog.

For each RCT, we extracted the article title, first author’s last name, country of affiliation of the first author, journal title, specialty, country of publication, publication language, year of RCT publication, DOI or PMID, the number of accrued citations according to Google Scholar for any article type, the number of systematic reviews that cite the RCT, sample size, type of funding, and the link to each RCT.

We extracted the following data for citing systematic reviews: title, year of publication, country of the first author, and access link.

We ascertained whether the RCT was included or not in the evidence synthesis or the meta-analysis. If applicable, we sought out the reasons for exclusion from the meta-analysis.

Before the final data extraction, we conducted a pilot to test the process. Two RCTs were randomly assigned to each of the three reviewers to extract the data in parallel and independently. The data extraction form was adjusted according to the findings of the pilot run-in.

Once all the RCTs were extracted and the corresponding citations identified, we did a second round of extraction with different reviewers to detect errors. If discrepancies were found, they were discussed and decided by consensus.

Since we studied all the RCTs of the included journals, no sample size calculation was required. Results are reported with descriptive statistics appropriate for each variable type: frequency distribution tables and summary statistics. No imputations were done on the database.

Results

On December 7, 2020, we searched MEDLINE for Latin American medical journals and found 29 that fulfilled the inclusion criteria. The journals were based in Argentina, Chile, Colombia, Mexico, and Peru, and 16 (55%) had an impact factor, but none were included in quartiles 1 or 2 of the Journal Citation Report ranking (Table 1).

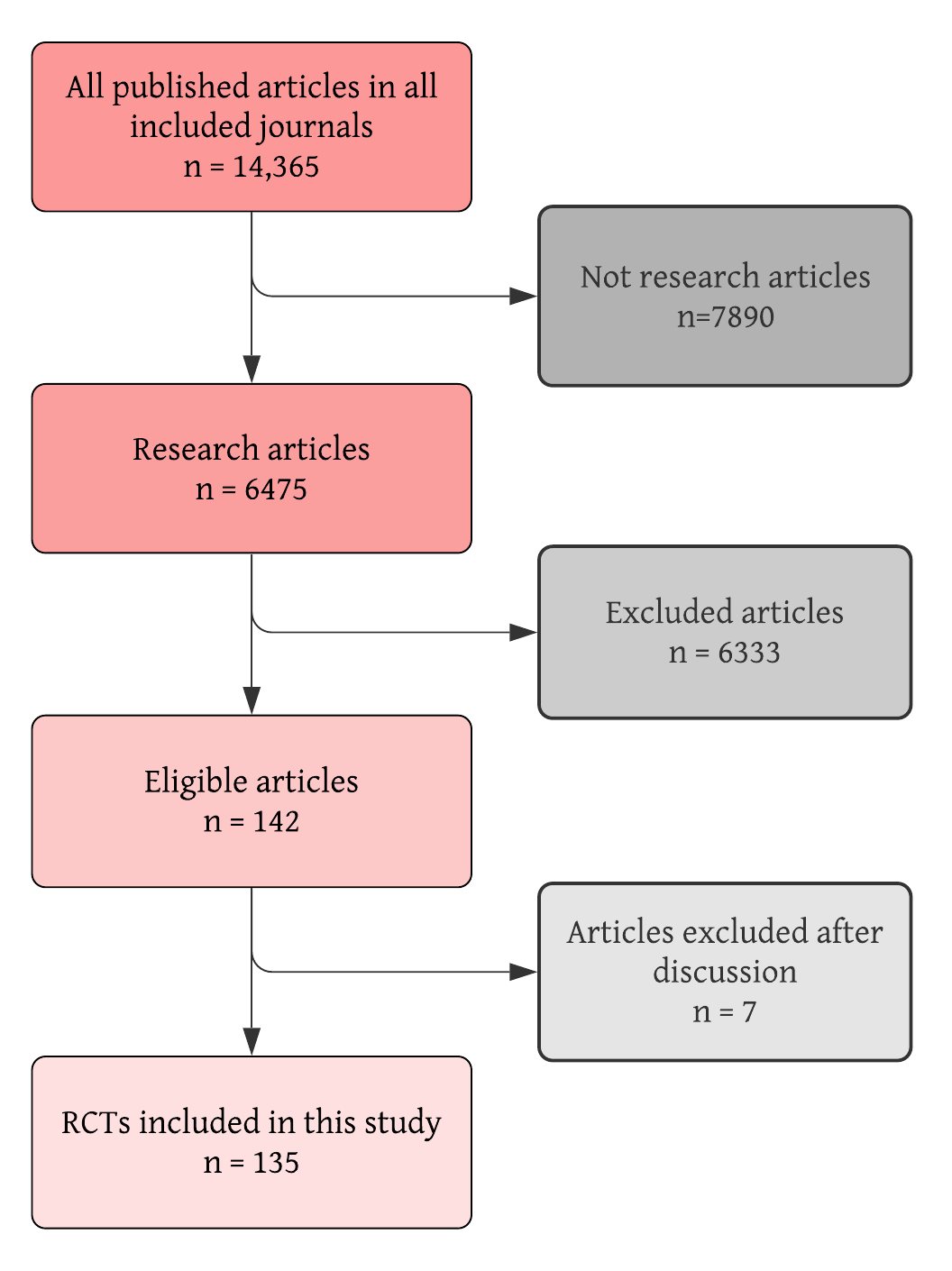

These 29 journals published 14,365 articles between 2010 and 2015, years inclusive. We excluded 7890 because they did not report original research findings. Of the 6475 research articles, 142 met the inclusion criteria and were deemed eligible for our analysis. Finally, 135 were included in this study due to having a randomized controlled design, thus accounting for 2% of all research articles published in these journals (Figure 1).

Identification, screening and selection process for the included RCTs.

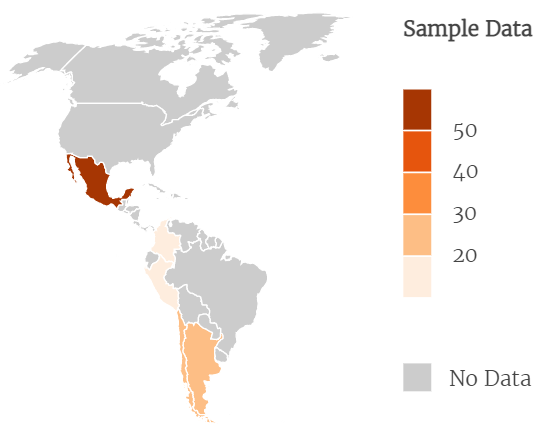
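The counts in the selection flow can be cross-checked with a few lines of arithmetic. The following is a minimal sketch that uses only the figures reported in the paragraph above; the variable names are illustrative and are not part of the study's own materials.

```python
# Counts reported in the Results section (variable names are illustrative).
total_articles = 14365   # all articles published 2010-2015 in the 29 journals
non_research = 7890      # excluded: did not report original research findings
eligible = 142           # deemed eligible after screening
included_rcts = 135      # confirmed randomized controlled design

# Research articles remaining after the first exclusion step.
research_articles = total_articles - non_research

# Share of research articles that were included RCTs.
rct_share = included_rcts / research_articles

print(research_articles)     # 6475
print(f"{rct_share:.1%}")    # 2.1%
```

The computed share rounds to the 2% quoted in the text.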

The complete list of the included RCTs is available as supplementary material. Of the 135 RCTs, 99 were published in Spanish and 36 in English. Only seven RCTs involved dentistry. The population of RCTs was also roughly evenly distributed by study year, with 2012 having 29 RCTs and 2013 having 19. The countries with the highest number of RCTs were Mexico with 62, Argentina with 28, and Chile with 24. Figure 2 shows the RCT distribution by country.

Country distribution of the RCTs included in the study (N = 135).

The median sample size of the RCTs was 64, with a minimum of 8 participants to a maximum of 946. Twenty-five RCTs disclosed a public funding source, 74 did not provide a funding statement, six declared no funding, and 30 reported industry or private sector funding.
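As a quick check on the funding breakdown, the sketch below turns the four reported counts into proportions. It assumes the categories are mutually exclusive, which the matching total of 135 suggests; the dictionary keys are our own labels.

```python
# Funding categories as reported above (labels are illustrative).
funding = {
    "public": 25,
    "no funding statement": 74,
    "declared no funding": 6,
    "industry or private": 30,
}

total = sum(funding.values())

# Share of RCTs in each funding category.
shares = {label: count / total for label, count in funding.items()}

# RCTs with no funding statement or with a declaration of no funding.
no_reported_funding = (
    funding["no funding statement"] + funding["declared no funding"]
) / total

print(total)                          # 135
print(f"{no_reported_funding:.0%}")   # 59%
```

The combined share of RCTs without a funding statement or without funding comes to about 59%.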

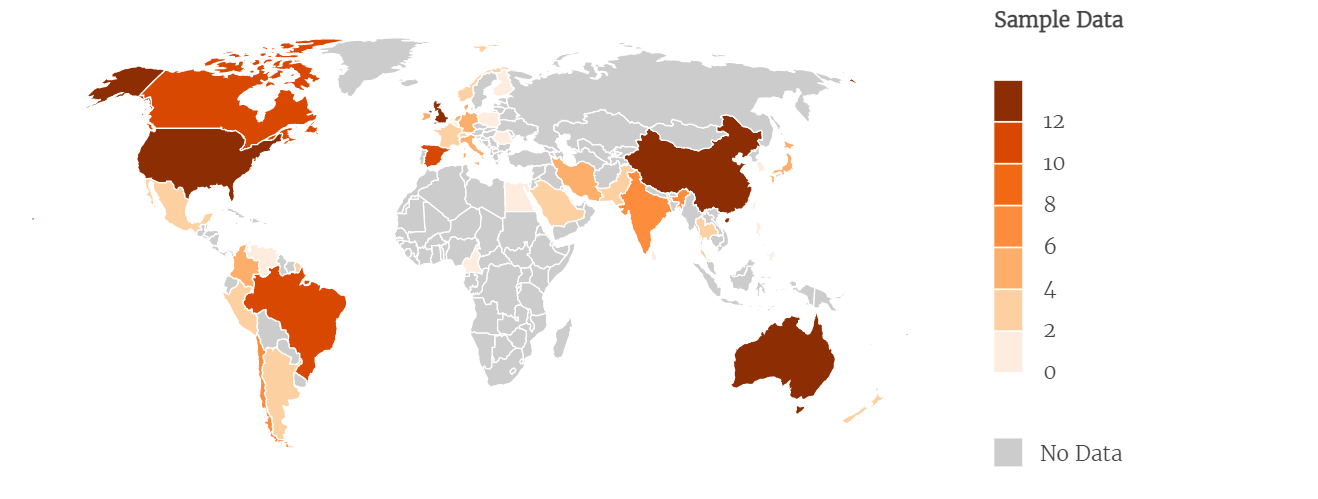

Two hundred and two systematic reviews cited 72 RCTs in any part of the article, and the maximum number of citations for a given RCT was 27. By country of the first author, the citing systematic reviews came from China (34), the United States (22), Australia (18), the United Kingdom (17), Spain (11), and Canada and Brazil (ten each); the remaining 80 were distributed among 36 other countries (Figure 3).

Country distribution of the systematic reviews that cite the RCTs included in the study (N = 202).
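The country counts for the citing systematic reviews can be tallied against the stated total of 202. This is a small sketch using only the numbers given in the text; the structure and names are assumptions made for illustration.

```python
# Citing systematic reviews by country of the first author, as reported.
named_countries = {
    "China": 34,
    "United States": 22,
    "Australia": 18,
    "United Kingdom": 17,
    "Spain": 11,
    "Canada": 10,
    "Brazil": 10,
}
other_countries_total = 80   # spread across 36 other countries

total_reviews = sum(named_countries.values()) + other_countries_total

# Share contributed by the single largest source country.
top_country = max(named_countries, key=named_countries.get)
top_share = named_countries[top_country] / total_reviews

print(total_reviews)                      # 202
print(top_country, f"{top_share:.1%}")    # China 16.8%
```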

Only 55 RCTs (40.7%) were included in the narrative evidence synthesis or meta-analysis of at least one systematic review. Of these, 45 were included in a meta-analysis, and eight were included only in the narrative evidence synthesis. The main reasons for exclusion from the meta-analysis were incomplete data (2), RCT was not appropriate for meta-analysis (3), high risk of bias (1), and not specified (2) (Table 2). Sixty-two percent of the included RCTs were cited by one or two SRs, as shown in Table 3. Notably, of the top-ranking nine RCTs with the most citations by systematic reviews, only 22% (2) were published in Spanish (Table 4).
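The proportions in this paragraph follow directly from the reported counts, as the minimal sketch below shows. It uses only figures from the text, with illustrative variable names. As reported, the two subgroups (45 and 8) sum to 53 rather than 55. The text does not say how the remaining two RCTs were classified, so the sketch only surfaces the difference.

```python
# Counts reported in the text (variable names are illustrative).
total_rcts = 135
included_rcts = 55        # included in at least one evidence synthesis
in_meta_analysis = 45
narrative_only = 8
exclusion_reasons = {
    "incomplete data": 2,
    "not appropriate for meta-analysis": 3,
    "high risk of bias": 1,
    "not specified": 2,
}
top_cited = 9
top_cited_spanish = 2

inclusion_rate = included_rcts / total_rcts
spanish_share_top = top_cited_spanish / top_cited

# Included RCTs not covered by the two reported subgroups.
unaccounted = included_rcts - (in_meta_analysis + narrative_only)

print(f"{inclusion_rate:.1%}")            # 40.7%
print(f"{spanish_share_top:.0%}")         # 22%
print(sum(exclusion_reasons.values()))    # 8
print(unaccounted)                        # 2
```

The exclusion reasons sum to eight, matching the number of RCTs included only in the narrative synthesis.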

Of the 202 citing systematic reviews, 145 included an RCT for evidence synthesis, of which 47 did so in the narrative evidence synthesis and 98 in the final meta-analysis. In the case of four systematic reviews, we could not establish whether the RCTs were included or not due to poor reporting. We contacted the corresponding authors of these systematic reviews to obtain this information, and none responded.

Nine RCTs received zero citations by any article type. One RCT accrued 248 citations, and the average number of citations per RCT was 14.

Discussion

After searching MEDLINE, we found 29 medical and dental journals in which we identified a total of 135 trials that fulfilled the inclusion criteria, accounting for 2% of all research articles published in these journals. Of these, 55 (41%) were included in at least one of the 202 systematic reviews that cited trials from this set. Of the nine randomized trials most cited by systematic reviews, only two were published in Spanish. Nine received zero citations by any article type, and most had small sample sizes.

Since the inception of systematic reviews in the early nineties, reviews have frequently limited their searches to English-language trials, which led to the term ‘language bias’ being coined for the effects that could ensue from excluding non-English language RCTs. In 1995, Gregoire et al. redid 28 meta-analyses that had applied language restrictions, this time including non-English articles, and found that at least one would have reached a different conclusion [10]. This study also found, likely for the first time, that an RCT was more likely to be published in an English-language journal if the results were statistically significant. In 1997, Egger et al. matched German and English-language RCTs by first author and year of publication to assess whether the level of significance differed, and found that one-third of the German RCTs reported significant differences, far fewer than the English-language papers [11]. These studies set the stage for introducing the concepts of publication bias and its derivative, language bias, which was later fully conceptualized by Song in a pivotal review article [16].

Knowing that statistically significant results found their way more easily into English-language journals, Juni et al. [17] searched Cochrane in 2002 for reviews without language restrictions and compared the treatment estimates from non-English language trials with those from English-language trials. They found that non-English language trials were more likely to produce significant results, but had lower methodological quality and fewer participants, and that excluding them had little effect on the summary treatment effect estimates. The median number of participants in non-English language trials was smaller, a finding corroborated in a more recent study [18]. Our study reports a median sample size of 64, consistent with these early reports.

Moher et al., in 1996, compared RCTs reported in English to those in French, German, Italian and Spanish and found no differences in the completeness of reporting or the methodological quality [15]. In 2000, another study examined whether language-restricted meta-analyses had different effect estimates and found no differences with language-inclusive ones [19]; hence, it found no evidence of language bias. Finally, in 2003, another study looked at differences in effect estimates between language-inclusive SRs for conventional medicine versus alternative medicine [20] and found that leaving out RCTs published in languages other than English for alternative medicine resulted in substantial bias in the results.

More recent results are still conflicting. While some authors conclude that limiting reviews to only English could result in biased effect estimates [21], especially in complementary and alternative medicine [18,22], others found that excluding non-English language publications from systematic reviews on therapeutics had a minimal effect on overall conclusions. A 2020 study by Nussbaumer-Streit et al. [21] randomly selected 59 Cochrane reviews with no language restrictions. For the reviews that had included non-English language studies, their exclusion did not alter the size or direction of the effect estimates or their statistical significance, partly because the majority of the excluded studies had small sample sizes. Another study from China evaluated language and indexing biases among Chinese-sponsored RCTs [22]. Among 470 RCTs, 78% were published in English-language journals and were more commonly positive trials, suggesting that language biases may lead to biased estimates of interventions in evidence synthesis.

Aside from language bias, other findings from this study should be analyzed considering previous studies. A recurring theme in the literature is the underrepresentation of research from developing countries. It is a well-established fact that most publications originate in the US and Europe. According to Langer et al. [23], less-resourced countries have poor research production, poor preparation of manuscripts, poor participation in publication-related decision-making processes, and journals may be biased. More recently, efforts have been made to offset this blatant asymmetry, such as evolving international research collaborations and more inclusive editorial boards. The low number of RCTs we found in the corpus of original articles published in the journals included in our study may also be explained by these shortcomings, essentially due to a variable level of expertise in clinical research among Latin American investigators.

Nonetheless, the problem of the under-citation of other-language papers is still present. A pointed letter to the editor complained that peer reviewers dismiss references to articles not published in English [24], a problem that could be offset by editors being more proactive in seeking out multilingual peer reviewers. A survey of 49 Colombian researchers in biological sciences showed that publishing in English represents substantial costs in time, finances, productivity and anxiety [25]. The global gap in science is also related to the hegemony of the English language and First World publishing groups [26].

The impact of non-English language journals has also been studied. Similarly to our study, moderate international recognition was found for a cohort of observational studies in psychiatry from Brazilian, German, French, Italian and Polish journals—only 50% were cited in the two years following publication [27]. A 2017 study of six natural science journals from five countries that publish both in English and not in English found that articles in English have higher citations even after adjusting for journal effect, year of publication, and paper length [28].

Our study only included MEDLINE journals, and given that all systematic reviews incorporate searches in PubMed/MEDLINE [29], the likelihood of our RCTs being identified is high. While this might be seen as a design bias of our research, inasmuch as it excluded non-MEDLINE indexed medical journals of the region, the uptake of these indexed, locally published RCTs remains low. If our population of RCTs can be retrieved, then its scant impact could be explained by language bias and the quality of the research.

We were also surprised by the low proportion of randomized trials among all research articles published in these MEDLINE-indexed medical and dental journals: only 2% of all research articles for the six-year study period. This finding may be partly explained by scarce funding, since roughly 60% of the RCTs included for analysis in this article did not report on funding sources or reported having none. Low regional investment in research and development [30] is partially responsible for this. When funding is available, authors tend to be institutionally required to submit their manuscripts to high-impact journals.

Some Latin American medical and dental journals striving to increase visibility are now receiving submissions in English or Spanish. However, there is a price to be paid, in that local audiences may be estranged if they are not fluent in English. While science going global has meant that it has also gone to English, moving to an all-English publication might not be desirable for most journals of non-English speaking countries, especially because fostering a clinical trial culture is also a function of educating the local Spanish-speaking physician community. Ultimately, journals may move to a bilingual publication where the manuscript preparation and peer review are done in Spanish, but the final report also comes out in English.

Our study has limitations. Since it was an exploratory, descriptive study, we did not include a comparison group. Further research could use our data to set up a comparison set of RCTs published in English but coming from other regions of the world, such as low-income English-speaking countries. Our study was limited to assessing the impact of RCTs based on inclusion or non-inclusion in systematic reviews or meta-analyses. There is no standardized way of defining how randomized research impacts our patients and communities. We used inclusion in systematic reviews as a proxy for this impact. Another way of assessing impact is the extent of RCT usage in clinical guidelines. While interesting, this fell beyond the scope of our study. We are aware of efforts to map Chilean clinical research to elaborate evidence gap maps of local research [31]. We are unaware of other studies of this kind in the region.

To the best of our knowledge, no previous studies have assessed the impact of Latin American RCTs on evidence synthesis. Our search for RCTs in the included journals was thorough, quality-checked and included dentistry. While we only searched for citations in Google Scholar, we did so to expand the sensitivity of the identification of citing systematic reviews as much as possible.

We overcame the ambiguity related to article titles appearing in English and Spanish by only using the metadata associated with the digital object identifier, nearly always the English version. Notwithstanding, we cannot know whether the citations retrieved in Google Scholar under the Spanish version of a title differ significantly from those retrieved under the English version. For similar reasons, we had to exclude RCTs published in Portuguese, which limits the generalizability of our findings to Brazil, a country that contributes significantly to regional scientific output.

Conclusions

RCT output in Latin American journals is extremely low. The overall impact of these RCTs is moderate, at best. RCTs published in English in local journals are cited more at the top echelon of citations but not at the lower ones.

Little funding, language bias and small sample sizes may explain the low inclusion in systematic reviews and meta-analyses.

While our findings are disheartening, they should prompt regional policymakers, funders, editors, and clinical investigators to introduce systematic interventions to improve the quality of locally conducted RCTs.